The year 2023 has been all about the revolution brought by Artificial Intelligence. Microsoft-backed OpenAI launched ChatGPT, an AI-powered natural language processing tool that holds human-like conversations and helps users compose emails, write essays, and produce code, in late 2022, and the company has since emerged as a disruptive force in the tech sector.

In March 2023, Microsoft rolled out an AI-powered security analysis tool to automate incident response and threat-hunting tasks, showcasing a security use case for the technology behind ChatGPT. The new tool, called Microsoft Security Copilot, is powered by OpenAI’s newest GPT-4 model and will be trained on data from Redmond’s massive trove of telemetry signals from enterprise deployments and Windows endpoints.

The cybersecurity industry has already been using generative AI chatbots to simplify and enhance software development, reverse engineering, and malware analysis, and Microsoft’s latest move reinforces that approach. The Satya Nadella-led tech giant already makes some $20 billion a year from the sale of cybersecurity products, and the push into AI automation is expected to open new revenue streams. Analysts also believe the move will set off an innovation race among cybersecurity start-ups.

Understanding innovation more

The ‘Security Copilot’ chatbot will work seamlessly with security teams to allow cyber defenders to predict and analyse new challenges in their environment, learn from existing intelligence, correlate threat activities, and make better decisions at machine speed.

According to Microsoft, the chatbot has been designed to help security teams identify an ongoing cyberattack, assess its scale, and obtain instructions to begin remediation based on proven tactics from real-world security incidents.

The ‘Security Copilot’ will also help determine whether an organization is susceptible to known cybersecurity vulnerabilities, while helping businesses examine the overall threat environment one asset at a time. In short, the system will summarize the events of an incident such as a data leak within a few minutes and prepare the information as a ready-to-share, customizable report. The ‘Security Copilot’ will come integrated with Microsoft products like Sentinel, Defender and Intune to provide an “end-to-end experience across their entire security program.”

Innovation changing cybersecurity game

‘Help Net Security’ reported on how Sophos X-Ops researchers have been working on three prototype projects that demonstrate the potential of GPT-3 as an assistant to cybersecurity defenders. These projects are using a technique called “few-shot learning” to train the AI model with just a few data samples, thus reducing the need to collect a large volume of pre-classified data.
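To make the idea of few-shot learning concrete, here is a minimal sketch of how a handful of labeled samples can be packed into a prompt for a language model. With models like GPT-3, the examples typically travel inside the prompt itself rather than being used to retrain the model. The function name, the sample messages, and the labels below are illustrative assumptions and do not come from the Sophos prototypes; the sketch only builds the prompt text and does not call any model.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt: an instruction, labeled examples, then the unlabeled query."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Message: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The final entry is left unlabeled so the model completes it.
    lines.append(f"Message: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical labeled samples; a real deployment would draw these from its own data.
EXAMPLES = [
    ("You have won a free cruise, click here to claim", "spam"),
    ("Meeting moved to 3 pm, see updated invite", "not spam"),
    ("Verify your account now or it will be suspended", "spam"),
]

prompt = build_few_shot_prompt(
    "Classify each message as spam or not spam.",
    EXAMPLES,
    "Lunch on Friday?",
)
print(prompt)
```

The resulting text would then be sent to a language model, which is expected to continue after the final "Label:" with its answer. Only three samples are needed here, which is the appeal of the approach when pre-classified data is scarce.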

The first application Sophos’ research team tested with the few-shot learning method was a natural language query interface for sifting through malicious activity in security software telemetry. The model was tested against its endpoint detection and response product.

“With this interface, defenders can filter through the telemetry with basic English commands, removing the need for defenders to understand SQL or a database’s underlying structure. Next, Sophos tested a new spam filter using ChatGPT and found that, when compared to other machine learning models for spam filtering, the filter using GPT-3 was significantly more accurate,” stated the ‘Help Net Security’ report.

“Finally, Sophos researchers were able to create a program to simplify the process for reverse-engineering the command lines of LOLBins. Such reverse-engineering is notoriously difficult, but also critical for understanding LOLBins’ behaviour—and putting a stop to those types of attacks in the future,” the report concluded.

“One of the growing concerns within security operation centres is the sheer amount of ‘noise’ coming in. There are just too many notifications and detections to sort through, and many companies are dealing with limited resources. We’ve proved that, with something like GPT-3, we can simplify certain labour-intensive processes and give back valuable time to defenders. We are already working on incorporating some of the prototypes above into our products, and we’ve made the results of our efforts available on our GitHub for those interested in testing GPT-3 in their own analysis environments. In the future, we believe that GPT-3 may very well become a standard co-pilot for security experts,” Sophos’ principal threat researcher Sean Gallagher said.

Not everything is hunky-dory

We all know how academic circles reacted to ChatGPT clearing exams, including difficult ones such as MBA, law and medical licensing tests. Educational institutes around the world have taken the ‘ban’ route to prevent students from using the AI-powered chatbot while completing their assignments or writing tests.

Now ChatGPT faces one more ban threat. The European Data Protection Board has expressed its intention to facilitate the coordination of member states’ actions with regard to the OpenAI-developed AI chatbot, especially after Italy banned the chatbot due to concerns over privacy violations.

Spain’s data protection agency, AEPD, has initiated an investigation into OpenAI, arguing that AI development should not infringe on individuals’ personal rights and freedoms. Germany is also considering following suit.

CNIL, the French regulatory authority, has already started a probe into ChatGPT following five complaints it received about the chatbot. One of the complainants was Eric Bothorel, a Member of Parliament, who alleged that the chatbot created false information about him. Bothorel is not alone here, as US law scholar Jonathan Turley too revealed that ChatGPT had invented a news article accusing him of sexual harassment of students during an ‘Alaska trip’.

The Italian authorities have given OpenAI a deadline of April 30 to comply with specific privacy requirements. Even if the Sam Altman-led venture meets those criteria and comes back online in Italy, ChatGPT and GPT-4, which reportedly “exhibits human-level performance on various professional and academic benchmarks,” must still meet the standards of GDPR, the EU’s data protection legislation, which requires, among other things, that the personal data online services handle be accurate.

Developments don’t inspire confidence either

On March 20, 2023, a bug in an open-source Redis client library led to a ChatGPT outage and data leak, in which some users could see other users’ personal information and chat queries. These chat queries are the records of past conversations shown in the sidebar (in simple terms, similar to Google search history). Users can click on one of them and regenerate a response from the chatbot.

The problem led OpenAI to take ChatGPT offline. The company’s analysis of the incident revealed that chat queries and the personal information of approximately 1.2% of ChatGPT Plus subscribers were exposed. The information included subscribers’ names, email and payment addresses, the last four digits of their credit card numbers, and the cards’ expiration dates.

Then in April 2023, reports surfaced about Samsung employees leaking sensitive data to ChatGPT. After the South Korean tech giant’s semiconductor division allowed its engineers to use ChatGPT, workers leaked secret information to the chatbot on at least three occasions. One employee asked the chatbot to check sensitive database source code for errors, another solicited code optimization, and a third fed a recorded meeting into ChatGPT and asked it to generate minutes. These incidents amounted to data security breaches, forcing Samsung to restrict the length of employees’ ChatGPT prompts to a kilobyte, or 1024 characters of text, and to start building its own chatbot.
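A prompt-size cap of this kind is simple to enforce before anything is sent to a chatbot. The sketch below is a hypothetical illustration and not Samsung’s actual control; the function names and the choice of UTF-8 encoding are assumptions. It also shows that a kilobyte equals 1024 characters only for plain ASCII text, because accented or non-Latin characters occupy more than one byte each.

```python
MAX_PROMPT_BYTES = 1024  # one kilobyte, the limit described in the reports


def prompt_size_bytes(prompt: str) -> int:
    """Return the size of the prompt in bytes when encoded as UTF-8 (an assumed encoding)."""
    return len(prompt.encode("utf-8"))


def is_within_limit(prompt: str, limit: int = MAX_PROMPT_BYTES) -> bool:
    """Return True if the prompt fits within the byte limit."""
    return prompt_size_bytes(prompt) <= limit


ascii_prompt = "a" * 1024      # 1024 ASCII characters occupy exactly 1024 bytes
accented_prompt = "é" * 600    # 600 characters, but 2 bytes each in UTF-8

print(is_within_limit(ascii_prompt))
print(is_within_limit(accented_prompt))
```

A length cap limits how much can leak in a single prompt, but it does not inspect what the text contains, so a short secret would still pass the check.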

OpenAI too has urged ChatGPT users not to share secret information with the AI tool during conversations as the latter is “not able to delete specific prompts from your history.”

The news of Microsoft launching ‘Security Copilot’ on the ChatGPT ecosystem has excited tech enthusiasts about the possibilities the innovation brings for dealing with new and dynamic challenges in cybersecurity. However, the chatbot’s legal troubles in Europe over data protection concerns, along with the March 20 outage and the data leaks by Samsung staffers, have dampened the mood.

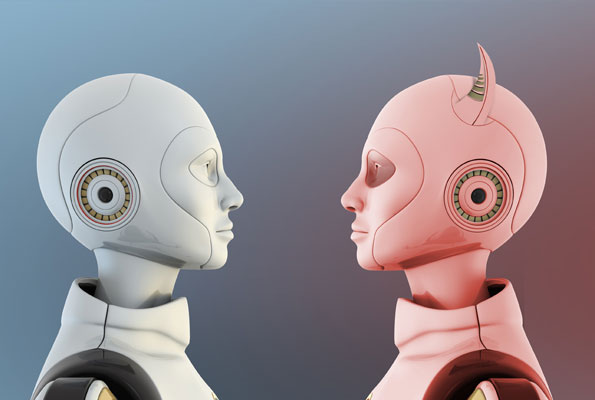

Should we trust ChatGPT and AI solutions as cyber warriors? Or are these very tools themselves becoming cybersecurity threats? To get the answers, Global Business Outlook caught up with Harsh Suresh Bharwani, CEO and MD of Jetking Infotrain.

Harsh Suresh Bharwani spearheads the International Business, Dedicated Services, and Employability initiatives at Jetking. In the past decade, he has trained over 40,000 students on success, confidence, social skills, leadership, business, health, and finance. With 17 years of experience behind him, he is a Certified NLP Trainer and Certified Business Coach. Over the years, he has donned various hats and excelled in various departments of the business.

Accounting, counselling and sales, HR management, channel management, international operations, placement, and marketing are some of the areas Harsh Suresh Bharwani has worked in during his journey with Jetking. In addition to being a leader par excellence, he is known for his innovation, empathy, understanding, ability to connect with employees, and multitasking.

Here are the excerpts from the interview.

Q) Microsoft introduced ChatGPT capabilities to its cybersecurity business. How do you read this development?

A) Microsoft has been actively working on enhancing its cybersecurity solutions, and the introduction of ChatGPT capabilities is a significant step towards achieving this goal. ChatGPT’s ability to process natural language queries and generate contextual responses makes it a valuable tool for threat detection, incident response, and other security-related tasks. By integrating ChatGPT into its security offerings, Microsoft can improve its threat intelligence capabilities and provide more comprehensive security solutions to its customers.

Q) With the domain of cybersecurity facing new threats regularly, what are the chances for Microsoft’s AI-backed solution to succeed?

A) The cybersecurity domain is constantly evolving, and new threats emerge every day. AI-powered solutions like ChatGPT can help organizations stay ahead of these threats by detecting and responding to them quickly and efficiently. The success of Microsoft’s AI-backed solution will depend on several factors, including the effectiveness of the underlying algorithms, the quality of data used to train the model, and the ability to integrate the solution seamlessly into existing security workflows. If Microsoft can address these challenges effectively, its AI-backed cybersecurity solution could prove to be a game-changer in the industry.

Q) The banking and health sectors have been frequently targeted by cyber attackers. Will Microsoft Security Copilot be a solution for such attacks?

A) The banking and healthcare sectors are among the most targeted industries by cyber attackers. Microsoft Security Copilot, a security automation solution that leverages ChatGPT, could be a game-changer for these industries. The solution’s ability to automate security workflows, detect and respond to threats quickly, and generate contextual responses can help organizations in these sectors enhance their cybersecurity posture significantly. However, it’s worth noting that no cybersecurity solution is foolproof, and organizations must adopt a comprehensive approach to cybersecurity that includes people, processes, and technology.

Q) How will generative AI-based tools affect the cybersecurity market?

A) Generative AI-based tools like ChatGPT can have a significant impact on the cybersecurity market. These tools can automate several security-related tasks, including threat detection, incident response, and vulnerability assessment, thereby freeing up valuable resources for security teams. Additionally, generative AI can help security teams make better-informed decisions by providing real-time insights and actionable intelligence. However, it’s essential to note that these tools are not a replacement for human expertise and must be used in conjunction with human intelligence to achieve optimal results.

Q) ChatGPT has been banned in Italy due to its massive storage of personal data. Is the OpenAI product itself a data security concern?

A) ChatGPT has faced scrutiny for its data storage practices, particularly in regions with strict data privacy regulations like the European Union. In Italy, the product was banned due to concerns over its massive storage of personal data. While ChatGPT’s data storage practices may raise concerns, it’s worth noting that OpenAI has taken several steps to address these concerns. For example, OpenAI has implemented strict access controls and encryption protocols to safeguard user data. Additionally, OpenAI has collaborated with privacy experts and regulators to ensure compliance with data protection regulations.

Q) When Samsung staff entered the company’s semiconductor-related information into ChatGPT, data leaks happened. What is your take on this?

A) The incident at Samsung involved a data leak related to the company’s semiconductor business. The details of the leak are not publicly known, but reports suggest that Samsung staff had entered sensitive information into ChatGPT and that this information was later accessed by unauthorized parties. This incident highlights the importance of properly securing data when using AI systems like ChatGPT.

One possible explanation for the data leak at Samsung is that employees may have inadvertently disclosed sensitive information by inputting it into the AI model without fully understanding the risks involved. This underscores the need for companies to provide adequate training and education to employees who have access to sensitive information.

Another possibility is that the ChatGPT system itself was vulnerable to attack, perhaps due to inadequate security measures. This is a concern not only for ChatGPT but for any AI-based system that handles sensitive data. Companies must take appropriate measures to secure their systems, including conducting regular security audits and implementing robust authentication and access controls.

The incident at Samsung is a reminder that the use of AI systems like ChatGPT can introduce new security risks that must be carefully managed. While the potential benefits of AI in cybersecurity are significant, companies must take a proactive approach to manage these risks to ensure that sensitive data remains secure.

Q) Is ChatGPT a threat to cybersecurity? What are your thoughts about it?

A) As with any technology, there is always a risk of security threats when using ChatGPT. However, the risks associated with ChatGPT are not unique to the technology itself. Rather, the security of ChatGPT depends on how it is implemented and the measures put in place to protect it from attacks. Like any other AI system, ChatGPT must be carefully monitored and secured to prevent unauthorized access or malicious use. It is important for organizations to take appropriate steps to secure their systems, including regularly updating software and implementing robust authentication and access controls. Ultimately, the success of ChatGPT in the cybersecurity market will depend on how effectively it can be secured and integrated into existing security systems.